This is one of a series of interrelated articles on learning and training; if you are interested in this subject, please check the other blog posts.

Every self-respecting educational professional has heard of Benjamin Bloom’s Taxonomy of Learning Domains. Most of us distinguish between gained knowledge, enhanced understanding and improved application.

Bloom’s model, however, consists of three learning domains: cognitive, affective and psychomotor, more commonly known as the knowledge, attitude and skills domains[1]. A summary table is shown below:[2]

| Cognitive (knowledge) | Affective (attitude) | Psychomotor (skills) |
|---|---|---|
| 1. Recall data | 1. Receive (awareness) | 1. Imitation (copy) |
| 2. Understand | 2. Respond (react) | 2. Manipulation (follow instructions) |
| 3. Apply (use) | 3. Value (understand and act) | 3. Develop precision |
| 4. Analyze (structure/elements) | 4. Organize personal value system | 4. Articulation (combine, integrate related skills) |
| 5. Synthesize (create/build) | 5. Internalize value system (adopt behavior) | 5. Naturalization (automate, become expert) |
| 6. Evaluate (assess, judge in relational terms) | | |

Most general literature on learning considers only the cognitive domain. Hence the common misunderstanding that application (cognitive level 3) is a measure of skills, whereas it is still part of the knowledge domain and is meant to gauge the application of concepts in real-life circumstances.

Looking at the complete table above, it is easy to see that (short-term) training will mostly affect levels 1 and 2 of the cognitive domain, with perhaps sporadic results on level 3.

The tools discussed in this blog post measure improvements on levels 1 and 2 of the cognitive domain.

A simple measurement technique

In one of the 5-day project management training programmes I facilitated in South Africa, we included two knowledge tests (one at the start and one at the end, to measure the knowledge differential) as well as two comprehension tests. The comprehension tests consisted of a ranking exercise, in which activities in the project management process had to be ranked in order of priority.

The two knowledge tests were different, whereas the ranking exercise was the same at both points: it was completed individually at the beginning and as a team at the end.

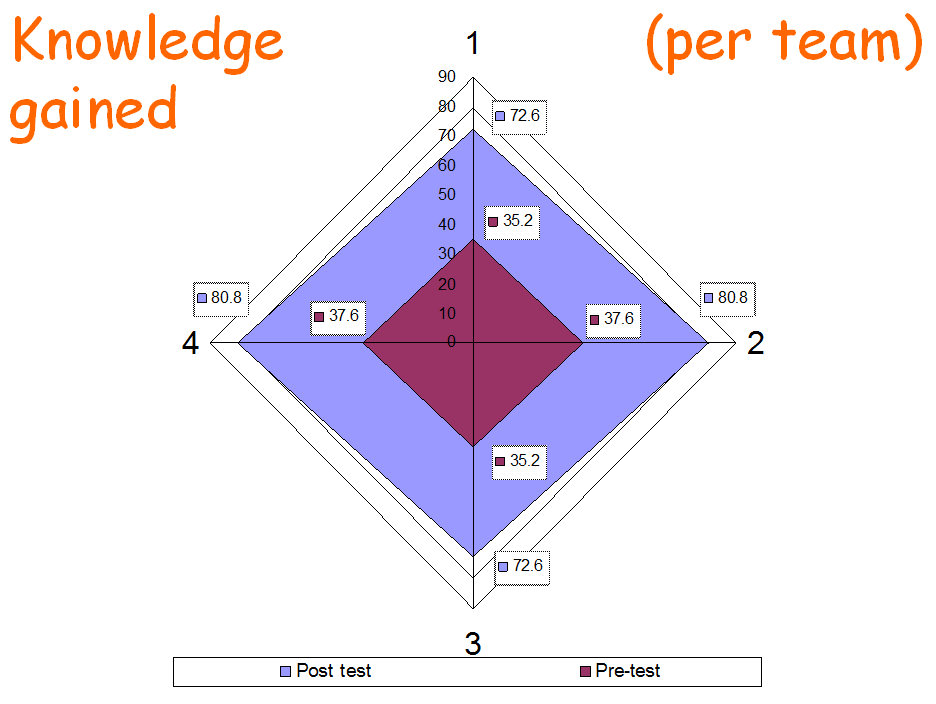

The graph above shows the average pre-test and post-test knowledge scores per team for the four teams in the group (the tests themselves were taken individually). The knowledge gain was clearly substantial.
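As a minimal sketch of how the per-team averages and the knowledge differential in that graph could be computed, assume each participant has a pre-test and a post-test score, both expressed as percentages so that the two different tests are on a comparable scale. The team names and scores below are hypothetical placeholders, not the course data.

```python
from statistics import mean

# Hypothetical illustrative scores (percent); NOT the actual course results.
# Each record: (team, pre_test_score, post_test_score) for one participant.
scores = [
    ("Team 1", 40, 75), ("Team 1", 50, 80),
    ("Team 2", 35, 70), ("Team 2", 45, 85),
]

def team_averages(records):
    """Return {team: (avg_pre, avg_post, knowledge_gain)} for each team."""
    by_team = {}
    for team, pre, post in records:
        by_team.setdefault(team, []).append((pre, post))
    result = {}
    for team, pairs in by_team.items():
        avg_pre = mean(pre for pre, _ in pairs)
        avg_post = mean(post for _, post in pairs)
        # Knowledge differential: team average after minus team average before.
        result[team] = (avg_pre, avg_post, avg_post - avg_pre)
    return result

for team, (pre, post, gain) in team_averages(scores).items():
    print(f"{team}: pre {pre:.1f}, post {post:.1f}, gain {gain:+.1f}")
```

Averaging per team, rather than plotting every participant, matches the way the graph groups the results, even though each test was written individually.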

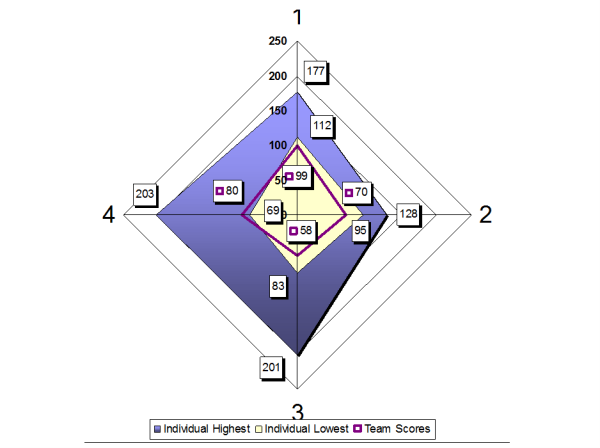

The second graph shows the results of the ranking exercise. The test measured variance from the expert opinion, so the lower the score, the better the result.
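The post does not specify exactly how variance from the expert opinion was calculated. A common convention for ranking exercises, assumed here, is to sum the absolute differences between a participant's rank for each item and the expert's rank for that item, so that 0 means perfect agreement and lower is better. The activity names and the expert ranking below are hypothetical placeholders.

```python
# Hypothetical expert ranking of project management activities (1 = highest priority).
# Activity names are illustrative placeholders, not the course's actual list.
EXPERT_RANKING = {
    "Define scope": 1,
    "Identify stakeholders": 2,
    "Build schedule": 3,
    "Estimate costs": 4,
    "Plan risk responses": 5,
}

def deviation_score(ranking, expert=EXPERT_RANKING):
    """Sum of absolute rank differences from the expert ranking (lower is better).

    Assumption: one common way to score ranking exercises; the original post
    only states that the test measured variance from the expert opinion.
    """
    if set(ranking) != set(expert):
        raise ValueError("ranking must cover exactly the expert's items")
    return sum(abs(ranking[item] - expert[item]) for item in expert)

# Hypothetical participant: two pairs of adjacent items swapped.
participant = {
    "Define scope": 2,
    "Identify stakeholders": 1,
    "Build schedule": 3,
    "Estimate costs": 5,
    "Plan risk responses": 4,
}
print(deviation_score(participant))  # 4
```

The same function can score an individual pre-test ranking and a team end ranking, which is what allows the two to be plotted on the same axis.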

The pre-test result is shown as a band (in blue) spanning the individual scores, with the highest and lowest scores labelled, whereas the end-test result is shown as the team score (purple line), because that test was completed collectively by each team.

Again, the improvement is obvious, but also notable is how much more evenly the team scores are distributed across the group.
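The comparison in the second graph can be sketched as follows, assuming deviation-style scores where lower is better: for each team, take the band of individual pre-test scores (best to worst), flag any individual who already scored better than the team's end score, and look at the range of team end scores as a rough measure of how evenly the teams are distributed. The `TeamResult` class is a hypothetical helper and all values are invented for illustration; Team 4 merely mirrors the post's example of a best individual score of 69 against a team score of 80.

```python
from dataclasses import dataclass

@dataclass
class TeamResult:
    """One team's ranking-exercise results; scores are deviations (lower is better)."""
    team: str
    individual_pre: list[int]  # individual pre-test scores
    team_post: int             # single team end-test score

    def band(self) -> tuple[int, int]:
        """Best (lowest) and worst (highest) individual pre-test score: the blue band."""
        return min(self.individual_pre), max(self.individual_pre)

    def individuals_beating_team(self) -> list[int]:
        """Individual pre-test scores already better (lower) than the team's end score."""
        return [s for s in self.individual_pre if s < self.team_post]

# Hypothetical values for illustration only; not the course data.
teams = [
    TeamResult("Team 1", [75, 98, 120], 62),
    TeamResult("Team 2", [80, 105, 131], 66),
    TeamResult("Team 3", [72, 101, 115], 70),
    TeamResult("Team 4", [69, 88, 95, 110], 80),
]

for t in teams:
    print(t.team, "band:", t.band(), "team score:", t.team_post,
          "individuals better than team:", t.individuals_beating_team())

# Range of team end scores: a small range suggests the teams ended up closely aligned.
team_scores = [t.team_post for t in teams]
print("Range of team end scores:", max(team_scores) - min(team_scores))
```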

What does this tell us?

That, over and above the much improved knowledge of project management terminology and concepts, teams in the group had a much more consistent understanding of the logic of the project management process by the end of the course.

Furthermore, the teams applied some of the concepts they had learned in completing their team project assignments, which was visible in their final presentations, so some result at cognitive level 3 was evident. However, each individual’s contribution to the team effort was not measured.

It has to be noted that, on occasion, some individual pre-test scores were better than the team end score (see team 4: best individual score 69, team score 80). We found that this was due either to a fluke in the pre-test or to the participant being certain of the ranking but failing to convince the group. This, in turn, was often due to the team dynamics at the time of the test.

However, rather than seeing this as a regression, one could argue that developing a common understanding matters more than accuracy, as it leads to a common purpose, which in turn opens the door to (but does not guarantee) improved performance in the workplace.

It is tempting to also conclude that level 2 in both the attitudinal domain (respond to a situation) and the skills domain (follow instructions) had been attained. However, as skills and especially attitudes are contextual, and performance in a training room is not necessarily a gauge of workplace behaviour, we will forgo these conclusions for now.

Closing Comments

The purpose of this blog post was not to state the obvious, namely that training outcomes are measurable, especially at the knowledge and comprehension levels. Rather, I hope it has illustrated that these criteria capture only part of the full potential of learning outcomes in terms of knowledge, skills and attitude.

Moreover, whether good learning outcomes actually lead to benefits such as positive behaviour change in the workplace cannot be established from the classroom. Benefit measurement is therefore an obligatory organizational task and needs to be managed as such. Because it concerns real-life performance, it also requires more complex and sensitive assessment techniques, and the acceptability of such practices is, again, determined by organizational maturity.

[1] The skills (psychomotor) domain was not fully developed by Bloom himself, and later researchers produced three different versions of it. For the purpose of this discussion, the model proposed by Dave is used.

[2] www.businessballs.com: Benjamin Bloom’s Taxonomy of Learning Domains – Cognitive, Affective, Psychomotor Domains – design and evaluation toolkit for training and learning

